DALL·E 2 Inpainting and Outpainting

OpenAI’s DALL·E 2 lets you edit generated content (inpainting) and extend an image beyond its original borders (outpainting). Let’s explore how both work.

Introduction

New to DALL·E 2? See the DALL·E 2 Review and Demo, which covers how it works and what it can do. This post assumes familiarity with DALL·E 2 basics.

What is inpainting? Using the edit function, you can erase part of an image. When you then submit a prompt for a generation frame, any transparent areas inside that frame are regenerated, or inpainted.
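To make the rule concrete, here is a minimal Python sketch that models an image as a grid of alpha values (0 for transparent, 255 for opaque) and marks which pixels inside a generation frame would be regenerated. The grid model and the `erase` and `inpaint_mask` helpers are hypothetical illustrations of the behavior described above, not DALL·E 2's actual implementation.

```python
from typing import List

# Hypothetical model: an image is a grid of alpha values, 0 = transparent, 255 = opaque.
AlphaGrid = List[List[int]]


def erase(alpha: AlphaGrid, x0: int, y0: int, x1: int, y1: int) -> None:
    """Mimic the Eraser tool: make the rectangle [x0, x1) x [y0, y1) transparent."""
    for y in range(y0, y1):
        for x in range(x0, x1):
            alpha[y][x] = 0


def inpaint_mask(alpha: AlphaGrid, fx: int, fy: int, size: int) -> List[List[bool]]:
    """Return a size x size grid that is True wherever the generation frame,
    placed with its top-left corner at (fx, fy), covers a transparent pixel."""
    height, width = len(alpha), len(alpha[0])
    mask = []
    for y in range(fy, fy + size):
        row = []
        for x in range(fx, fx + size):
            on_canvas = 0 <= x < width and 0 <= y < height
            # Off-canvas pixels hold no content, so they are treated as transparent too.
            row.append(not on_canvas or alpha[y][x] == 0)
        mask.append(row)
    return mask


# Usage: an opaque 8x8 "image" with a 3x2 patch erased.
image = [[255] * 8 for _ in range(8)]
erase(image, 2, 2, 5, 4)
regenerated = sum(cell for row in inpaint_mask(image, 0, 0, 8) for cell in row)
print(regenerated)  # 6
```

Treating off-canvas pixels as transparent is a design choice that also covers outpainting, since a frame hanging past the image edge sees those areas as empty.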

What is outpainting? DALL·E 2 output is typically 1024 × 1024 px, but if you use the edit function and place the generation frame outside the original image, DALL·E 2 generates new, outpainted content in the empty area.
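As a rough illustration of how outpainting grows the canvas, this sketch assumes each generation frame matches the typical 1024 × 1024 px output, identifies frames by their top-left corners with the original image at (0, 0), and computes the size of the box that covers them all. The `canvas_size` helper is hypothetical.

```python
from typing import List, Tuple

FRAME = 1024  # assumed frame size, matching the typical 1024 x 1024 px output


def canvas_size(frames: List[Tuple[int, int]]) -> Tuple[int, int]:
    """Width and height of the smallest box covering every frame.

    Each frame is given by its top-left corner (x, y); the original image
    is assumed to be the frame at (0, 0)."""
    left = min(x for x, _ in frames)
    top = min(y for _, y in frames)
    right = max(x for x, _ in frames) + FRAME
    bottom = max(y for _, y in frames) + FRAME
    return right - left, bottom - top


# Usage: extend the original 512 px to the right, then 512 px to the left.
print(canvas_size([(0, 0), (512, 0)]))             # (1536, 1024)
print(canvas_size([(0, 0), (512, 0), (-512, 0)]))  # (2048, 1024)
```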

The two techniques are not mutually exclusive, so they can be used together on the same image.

Edit Screen

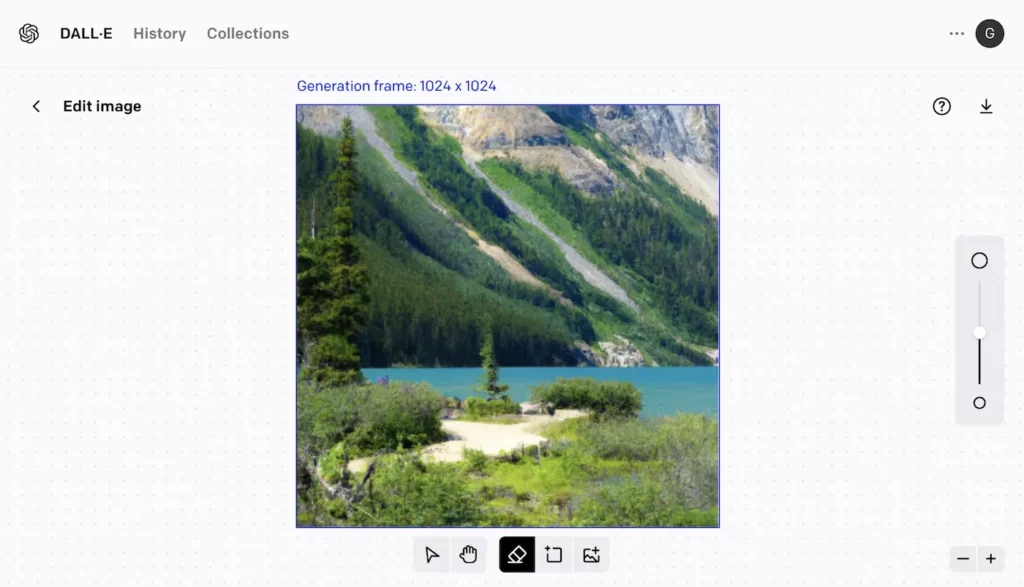

The Edit screen is used to inpaint and outpaint images with DALL·E 2. You have two ways to access this screen.

The first way starts from the DALL·E 2 landing screen. Select a result set from your history on the right, or enter a prompt to generate a new one. In the result set, click the image you want to edit, then click the Edit button on the image detail screen.

The second way also starts from the DALL·E 2 landing screen: click Upload an image below the prompt input field. You will be prompted to upload an image and make any cropping adjustments.

Either approach loads the image into the Edit screen, with a small toolbar at the bottom and a tool-size widget on the right. These tools should look familiar to anyone who has used an image editor, but let’s review them.

Arrow – Moves the generation frame (blue box). DALL·E 2 will attempt to generate content in the transparent areas inside this box. Transparency is represented by the grey and white grid.

Hand – Pans around the canvas. You can also activate it by holding down the space bar.

Eraser – Removes content, replacing it with transparency. Its size is controlled by the slider widget on the far right.

Box with plus – Adds a generation frame to the canvas.

Box with image and plus – Uploads an image to the editor.

Editing Tips

When editing, overlap the generation frame with existing content so that DALL·E 2 has context from which to create consistent content.
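One way to reason about this tip is to measure how much of the frame already contains content. The sketch below is a hypothetical helper that assumes a single rectangular original image and a 1024 px square frame, and returns the fraction of the frame that overlaps the image. The post does not give a recommended amount of overlap, so no threshold is implied.

```python
FRAME = 1024  # assumed square generation frame, in pixels


def overlap_fraction(frame_x: int, frame_y: int,
                     img_x: int, img_y: int, img_w: int, img_h: int,
                     frame: int = FRAME) -> float:
    """Fraction of the generation frame's area that overlaps an existing
    rectangular image (all coordinates are top-left corners in pixels)."""
    # Width and height of the intersection, clamped at 0 when the rectangles do not touch.
    ox = max(0, min(frame_x + frame, img_x + img_w) - max(frame_x, img_x))
    oy = max(0, min(frame_y + frame, img_y + img_h) - max(frame_y, img_y))
    return (ox * oy) / (frame * frame)


# Usage against a 1024 x 1024 original at (0, 0).
print(overlap_fraction(512, 0, 0, 0, 1024, 1024))    # 0.5
print(overlap_fraction(768, 256, 0, 0, 1024, 1024))  # 0.1875
print(overlap_fraction(1024, 0, 0, 0, 1024, 1024))   # 0.0 (edges touch, no shared pixels)
```

A result of 0.0 means the frame gives DALL·E 2 nothing from the existing image to match.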

Outpainted images are not saved automatically, so download your work as you go to avoid losing it. Only the individual generated frames of an outpainted image are saved in your history.

Each submitted prompt uses one credit, even if you are only regenerating a small part of a frame.
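Because every submission costs a credit, it can help to estimate the cost of an outpainting project before starting. This sketch assumes you extend a 1024 px image horizontally in one direction, that each new frame overlaps the existing content by a fixed number of pixels, and that you may resubmit each frame a few times since results vary. It does not count the credit for the original generation. `frames_needed`, `credits_needed`, and `attempts_per_frame` are hypothetical names used for illustration.

```python
FRAME = 1024  # assumed frame width, in pixels


def frames_needed(target_width: int, overlap: int, frame: int = FRAME) -> int:
    """New frames required to extend a `frame`-wide image to `target_width`,
    working in one direction with a fixed horizontal overlap."""
    if not 0 <= overlap < frame:
        raise ValueError("overlap must be at least 0 and smaller than the frame")
    extra = max(0, target_width - frame)  # pixels still to be outpainted
    step = frame - overlap                # new pixels each frame contributes
    return -(-extra // step)              # ceiling division


def credits_needed(target_width: int, overlap: int, attempts_per_frame: int = 1) -> int:
    """One credit per submitted prompt; `attempts_per_frame` is a hypothetical
    allowance for resubmitting a frame when the first result is unusable."""
    return frames_needed(target_width, overlap) * attempts_per_frame


# Usage: widen a 1024 px image to 3072 px.
print(frames_needed(3072, 256))      # 3
print(frames_needed(3072, 512))      # 4
print(credits_needed(3072, 256, 2))  # 6
```

Note the trade-off: more overlap gives DALL·E 2 more context, but each frame then adds fewer new pixels, so reaching the same width takes more frames and more credits.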

Demos

“Show, don’t tell.” For a firsthand look at what DALL·E 2 can do, here are some demos of inpainting and outpainting; you’ll often see the two techniques used together. The images are not currently stored on the DALL·E 2 site, so lower-resolution versions are provided here.

Rocky Mountains – Inpainting & Outpainting

Prompt:

Scenic picture of the Rocky Mountains from the shore of a crystal blue lake, 4k, detailed, landscape photo

This image started from the center generated frame and was built outward. On the far left and right, you can see where DALL·E 2 started to struggle to generate new, detailed content, which is one of the problems with outpainting.

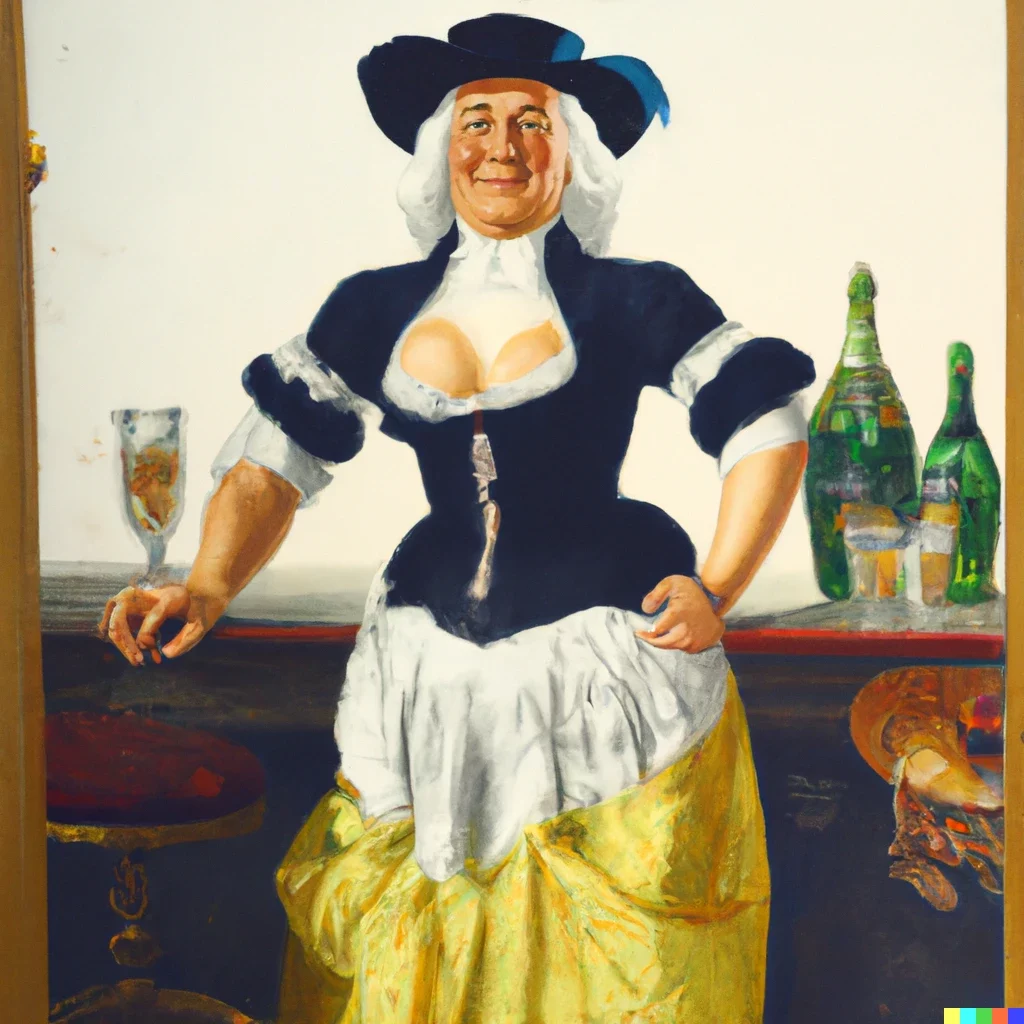

Quaker Oats Man – Outpainting

This demo attempts to recreate a funny outpainting by Dardan Aeneas that started from the character in the Quaker Oats logo. The logo was edited to erase everything except the head, then uploaded to the DALL·E 2 editor.

Prompt:

1800s painting of a buxom woman wearing skimpy clothes at a bar

Original: labs.openai.com | Reddit post

I used the same source image and prompt to see whether the output would be the same or similar. In my experience, results can vary wildly from one generation to the next.

Unfortunately, the word ‘skimpy’ now appears to trigger the content policy warning, so it was replaced with ‘revealing’.

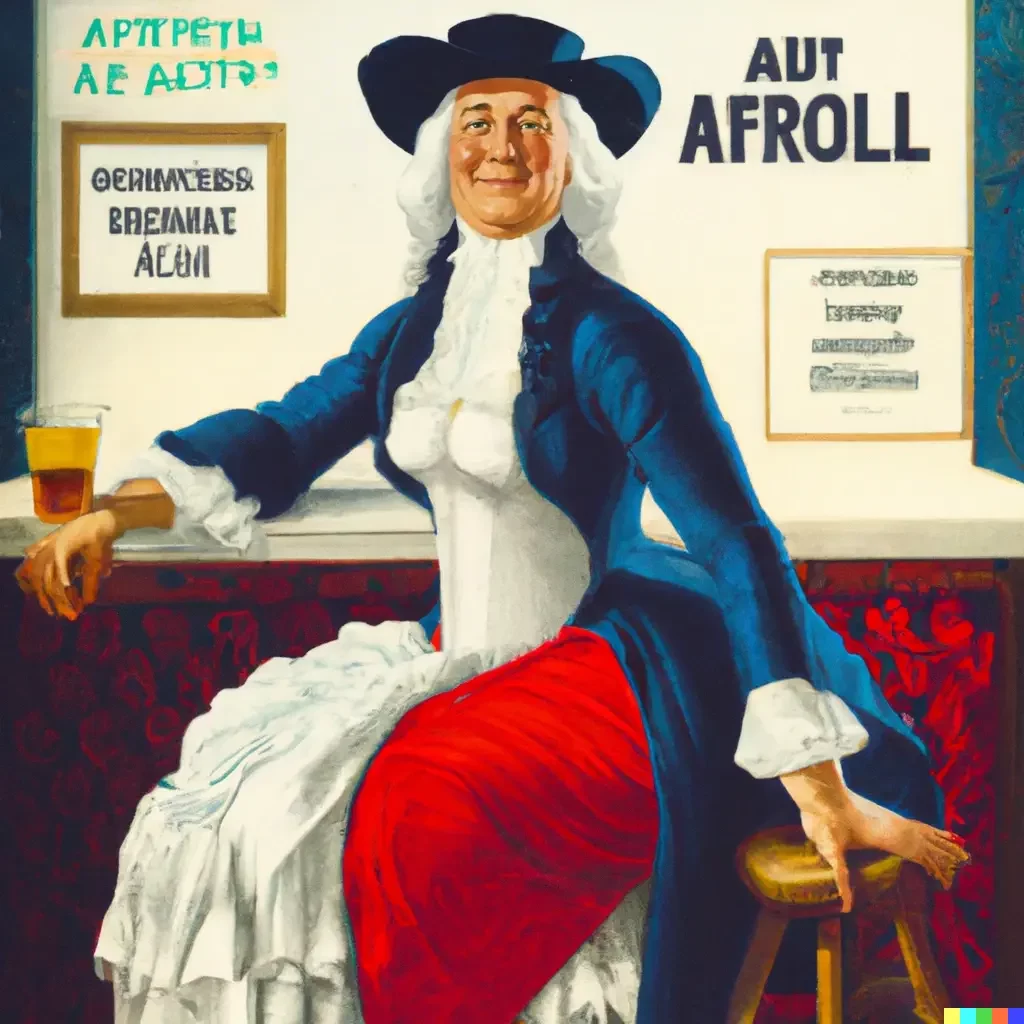

Prompt:

1800s painting of a buxom woman wearing revealing clothes at a bar

The results were not bad; this was the best of the set, shown without any inpainting or other changes. The garbled words and sentences are a typical DALL·E 2 problem.

Fantasy Scene – In and Outpainting

Prompt:

A digital illustration of Lothlorien houses on treetops with fireflies, 4k, detailed, trending in artstation, fantasy vivid colors, organic and bent

Outpainting became harder the further I moved from the center (original) image. Typical issues include limited detail and colors that blow out to one extreme. It is almost as if DALL·E 2 just loses interest!

1950s Holiday Post Card – In and Outpainting

Prompt:

1950s color illustration of christmas

The goal here was simply to see how outpainting handles a different genre. It also happens to be seasonally appropriate.

Conclusions

Editing generated images. Inpainting is useful for correcting issues in generated images: erase the problem area and regenerate it.

Control output by providing input. You can exert some control over the generation by uploading images of text, people, or objects and having DALL·E 2 fill in around them. This can work as a sort of collage, with the original work you provide steering the generation.

Drift of generated content. In larger images, new frames can become inconsistent with the rest of the image, tending to ‘drift’ as you work away from the first generated frame. Overlapping with existing content gives DALL·E 2 something to work from, but even that won’t necessarily prevent the drift.

Inpainting and outpainting open up more creative uses of DALL·E 2 by providing ways to steer the generation. They let you collaborate with DALL·E 2 and craft its output, rather than acting as a passive user.